Every time a company trials AI, someone inevitably says, “What if it makes a mistake?”

It’s a fair question.

Nobody wants an algorithm approving dodgy loans or misclassifying cancer scans. But let’s be honest: humans make those mistakes too, just with more confidence and less documentation.

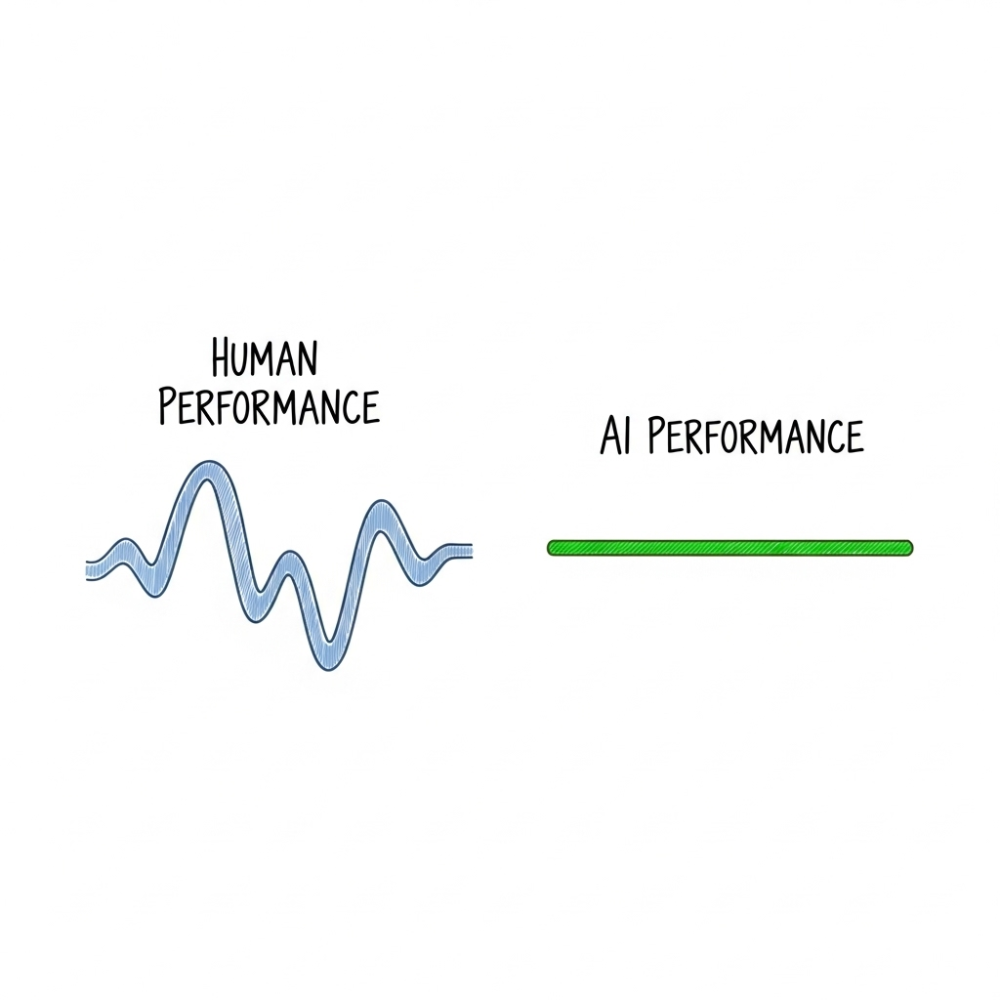

Most people hold a subconscious belief that humans operate at 100% accuracy, when in reality even elite professionals sit closer to 90 to 95%.

People make errors because, by our very nature, we’re not robots. We get emotional, tired, biased, or distracted by the office birthday cake.

This makes humans “human”, but also makes us objectively terrible at making rational decisions with perfect consistency.

Which leads to my position. AI doesn’t have to be perfect to outperform us. It just needs to be less wrong more often.

The Trouble With the Human Factor

Every business I’ve worked with already accepts that humans are fallible. They build checks, reviews, and QA layers into processes precisely because humans are error-prone.

We double-handle work, create “second eyes” policies, and audit our own processes. Not because we love paperwork, but because we know we’ll stuff something up eventually.

I’ve spent years designing human-oriented processes, saying “A human wouldn’t actually do that, would they?” and inevitably having to eat my words within a few weeks of deployment.

AI, for all its quirks, doesn’t suffer Monday blues or post-lunch slumps. It doesn’t hold grudges or get flustered by passive-aggressive emails. It doesn’t ignore training sessions completely.

It simply applies the same fundamental probabilistic logic, at the same level, all day, every day.

Even if that logic is only 96 to 98% accurate against perfection, that can easily represent a 2 to 3 percentage point improvement over humans, which can translate into millions saved or earned.
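To make that arithmetic concrete, here is a rough sketch. The decision volume, the cost per error, and the two accuracy figures are all hypothetical numbers picked for illustration, not data from any client or study.

```python
def annual_error_cost(decisions_per_year, accuracy, cost_per_error):
    """Expected yearly cost of wrong decisions at a given accuracy."""
    errors = decisions_per_year * (1 - accuracy)
    return errors * cost_per_error


# Hypothetical inputs, chosen only to illustrate the calculation.
DECISIONS_PER_YEAR = 1_000_000
COST_PER_ERROR = 50.0      # assumed average cost of one wrong decision, in dollars
HUMAN_ACCURACY = 0.94      # assumed, within the 90 to 95% range quoted above
AI_ACCURACY = 0.97         # assumed, within the 96 to 98% range quoted above

human_cost = annual_error_cost(DECISIONS_PER_YEAR, HUMAN_ACCURACY, COST_PER_ERROR)
ai_cost = annual_error_cost(DECISIONS_PER_YEAR, AI_ACCURACY, COST_PER_ERROR)
savings = human_cost - ai_cost

print(f"Human errors cost: ${human_cost:,.0f}")
print(f"AI errors cost:    ${ai_cost:,.0f}")
print(f"Difference:        ${savings:,.0f}")
```

Under these made-up inputs, three points of accuracy is worth about $1.5 million a year. Swap in your own volumes and error costs; the point is that the gap scales with how many decisions you make.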

Executives often hold AI to an impossible standard of zero mistakes. The irony? We’ve never been able to hold people to that bar.

A sales team can miss targets for months without anyone declaring the human model “not ready for production”. An algorithm misclassifies five out of a thousand entries, and suddenly we panic. It’s a phenomenon called ‘algorithm aversion’.

The fairer question isn’t “Is AI perfect?” but “Is it better than what we have now?”

For a sharper test, think in competitive terms. What happens when a rival runs 1–2 points more accurate than you for a year?

In trading, a 1% edge is gold dust. In healthcare, it’s lives saved. In operations, it’s fewer delays, happier customers, and staff who actually get to go home on time.

The Sweet Spot: Humans Plus AI

Of course, AI isn’t flawless. As a probabilistic system, it likely never will be flawless (unless paired with deterministic automation flows).

It has blind spots including limited context, ethical nuance, and sometimes, let’s be honest, a worrying confidence in its own nonsense.

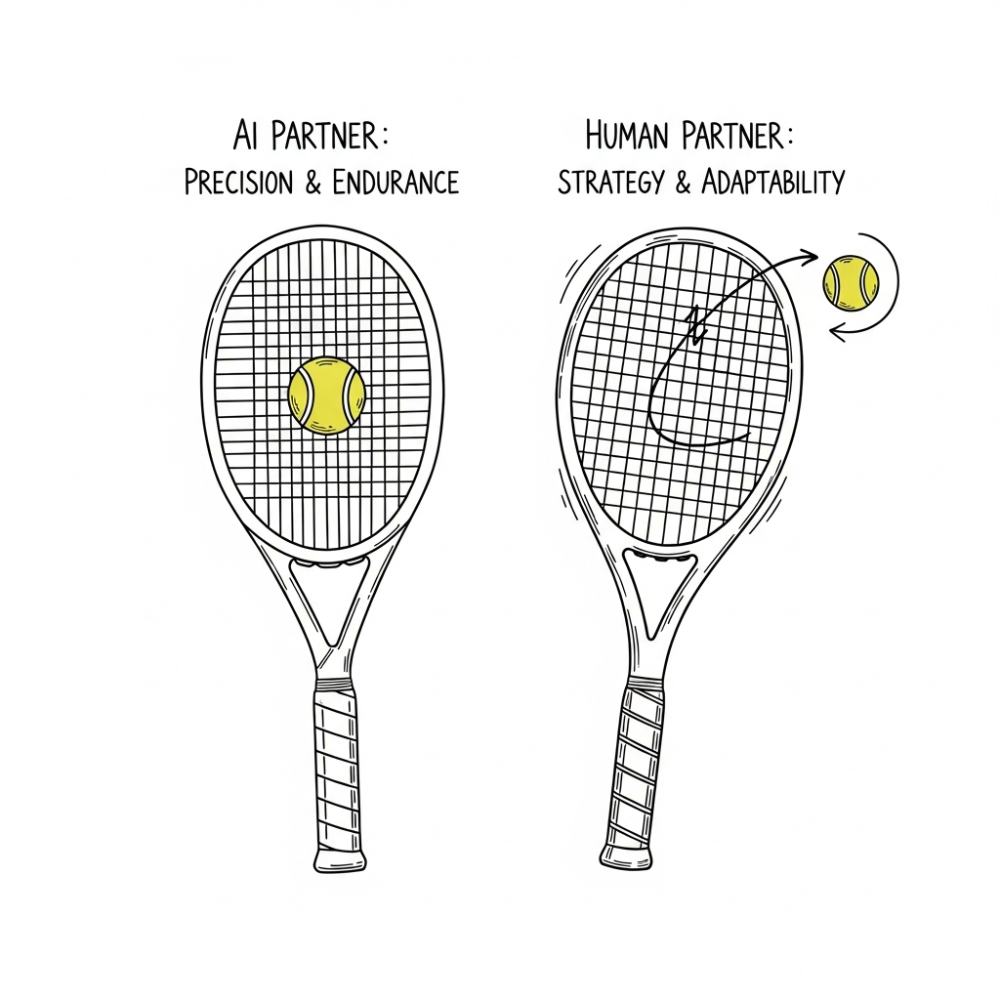

That’s where humans come in. People provide judgment, empathy, and the ability to say, “Hang on, that doesn’t feel right”. Let AI excel at relentless pattern-spotting, statistical rigour, scale, and immunity to any form of sickness.

Together, they form a hybrid system that’s closer to 100% reliable than either could manage alone.
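A back-of-envelope way to see why: suppose the AI makes the first pass and a human reviewer catches some share of its mistakes. The sketch below assumes the reviewer never introduces new errors and that their catch rate is independent of which errors the AI makes. Both assumptions are generous, and the figures are hypothetical.

```python
def hybrid_accuracy(ai_accuracy, reviewer_catch_rate):
    """Accuracy after a human reviews the AI's output.

    Simplifying assumptions (not from any study):
    - the reviewer only fixes AI errors and never adds new ones
    - the reviewer catches a fixed share of AI errors
    """
    ai_error = 1 - ai_accuracy
    residual_error = ai_error * (1 - reviewer_catch_rate)
    return 1 - residual_error


# Hypothetical: a 97% accurate AI, with a reviewer who catches 90% of its misses.
combined = hybrid_accuracy(ai_accuracy=0.97, reviewer_catch_rate=0.90)
print(f"Combined accuracy: {combined:.1%}")
```

With those inputs, the pair lands at roughly 99.7%, better than the AI alone at 97% or a human alone at 90 to 95%. Real reviewers will catch fewer errors than this if they start rubber-stamping the machine, so treat it as an upper bound on the idea rather than a forecast.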

Think of it like a really solid doubles team in tennis. The AI never tires, never blinks, and always returns the ball cleanly. The human calls the shots, adjusts the play, and takes the smart risks. Neither is perfect, but together they’re hard to beat.

This concept is already proven in practice.

In chess, after IBM’s Deep Blue beat Garry Kasparov, a new form of play called “Centaur chess” emerged, where a human and an AI team up against a pure AI player. The result? Teams of a human (even a weak one) plus a machine have beaten even the strongest computers playing alone.

Kasparov noted that “Centaurs” absolutely kicked ass, outperforming either pure humans or pure AIs. The human brings strategic guidance and creative tactics, while the AI provides tactical calculation and error-free memory.

Which brings me neatly onto competition and the factors that help us win business chess matches.

Enterprises love metrics. KPIs, SLAs, OKRs. We measure everything except, apparently, our tolerance for human error.

The moment we apply the same logic to AI, a fascinating shift happens.

If humans average 90 to 95% accuracy and AI clocks 97%, that’s not “imperfect”, as it would be reported internally. That’s better than the competition.

Holding out for perfect AI is like requiring zero defects in a factory with no tolerances. It simply isn’t going to happen. The knock-on effect is ultimately a lack of competitiveness against others in the market.

So, What Are We Really Afraid Of?

I believe the fear behind adopting imperfect AI boils down to accountability.

When a human makes a bad call, we know who to front up to. We can ask why, hear the story, weigh intent, and, if needed, escalate. When an AI makes the same mistake, the blame smears across vendors, models, training data, and a black box no one can look in. That invisibility makes the error feel colder and less fair, even if it happens less often.

It’s the same reason we aren’t seeing faster adoption of self-driving cars even if they are objectively safer.

So, we see AI projects paused or outright cancelled even though they would have provided measurable benefits. It’s an all-too-common issue.

We all need to reframe how we approach AI. We need to become accepting of the fact that machines are quietly outpacing us in our own jobs.

It’s an uncomfortable truth, but a vital one to understand.

AI doesn’t have to replace us to outperform us. It just has to make fewer mistakes, more consistently, across more hours than we ever could. The sooner we stop comparing AI to some mythical flawless being and start comparing it to ourselves, the sooner we’ll see its real value.

If that all feels a little too ‘real’, here’s my optimistic take. AI gives us a chance to upgrade the human job, not erase it.

Let the machines grind through the repetitive, the high-volume, the stuff that punishes fatigue. Keep the messy calls, the empathy, the values, the trade-offs.

If that’s the split, productivity goes up, risk goes down, and we spend more of our time on work that actually feels like ours.

That’s a world I want to live in.

I’m David Bruce, and this is Optimistic Intelligence.

I write about AI from the perspective of someone who’s been consulting for some of Australia’s biggest businesses over the last 5 years (and another 5 at one of the world’s biggest FMCG firms)… including the good, the bad, and the occasionally ridiculous.

The goal’s simple: cut through the hype and talk honestly about how AI is really showing up in work and consulting. This is a space for me to articulate the speed at which the world is changing around us, trying to wrangle the chaos and ground it in reality.

I aim to write one article a week, sometimes more, sometimes less.

If that sounds useful (or at least more interesting than another daily AI news summary email) hit subscribe and join me.